The KinesisAsyncClient instance you supply is thread-safe and can be shared amongst multiple GraphStages. The FirehoseAsyncClient instance you supply is likewise thread-safe and can be shared amongst multiple GraphStages.

The constructed Source will return Record objects by calling GetRecords at the specified interval and according to the downstream demand. Committing records (checkpointing) can be done manually or using the provided checkpointer Flow/Sink.

The Flow outputs the result of publishing each record. This means that you may have to inspect individual responses to make sure they have been successful. Dynamic size batches and parallel requests are necessary to achieve the best possible write throughput to the stream.

Another Kinesis connector which is based on the Kinesis Client Library is available. Please read more about it at GitHub 500px/kinesis-stream.

For more information about Kinesis please visit the Kinesis documentation. The table below shows direct dependencies of this module and the second tab shows all libraries it depends on transitively.

Are there any compatible alternatives to AWS Firehose for local offline development?
Publishing to a Kinesis stream requires an instance of KinesisFlowSettings, although a default instance with sane values and a method that returns settings based on the stream shard number are also available. Note that the throughput settings maxRecordsPerSecond and maxBytesPerSecond are vital to minimize server errors (like ProvisionedThroughputExceededException) and retries, and thus achieve a higher publication rate.

Publishing to a Kinesis Firehose stream requires an instance of KinesisFirehoseFlowSettings, although a default instance with sane values is available. As of version 2, the library will not retry failed requests.

In order to correlate the results with the original message, an optional user context object of arbitrary type can be associated with every message and will be returned with the corresponding result.

For a single shard you simply provide the settings for a single shard. You can merge multiple shards by providing a list of settings.

For more details about the HTTP client, configuring request retrying and best practices for credentials, see AWS client configuration. It is recommended to shut the client instance down on Actor system termination.

The KCL also handles record sequence checkpoints. For more information about KCL please visit the official documentation.

Alpakka is Open Source and available under the Apache 2 License.

Has anyone dealt with this? I can't seem to find any existing solutions for this online. It doesn't need to be extremely fast or handle a lot of data, but it does need to fit in with AWS clients as much as possible.

Have a look at localstack, which is a local AWS cloud stack.
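To illustrate why the throughput settings scale with the shard count, here is a minimal sketch that derives stream-wide limits from a number of shards. It is not Alpakka code: `ShardThroughput` and `forShards` are hypothetical names, and the per-shard constants (1,000 records and roughly 1 MB per second) are assumptions taken from the write limits AWS publishes, which should be checked against the current quotas.

```java
// Hypothetical helper, not part of Alpakka: derives stream-wide rate limits
// from the shard count, using assumed per-shard write limits.
final class ShardThroughput {
    // Assumption: per-shard write limits as published by AWS; verify before use.
    static final int MAX_RECORDS_PER_SECOND_PER_SHARD = 1_000;
    static final int MAX_BYTES_PER_SECOND_PER_SHARD = 1_000_000;

    final int maxRecordsPerSecond;
    final int maxBytesPerSecond;

    private ShardThroughput(int maxRecordsPerSecond, int maxBytesPerSecond) {
        this.maxRecordsPerSecond = maxRecordsPerSecond;
        this.maxBytesPerSecond = maxBytesPerSecond;
    }

    /** Scales the per-shard limits by the number of shards, failing on overflow. */
    static ShardThroughput forShards(int shards) {
        if (shards < 1) {
            throw new IllegalArgumentException("shards must be at least 1");
        }
        return new ShardThroughput(
            Math.multiplyExact(shards, MAX_RECORDS_PER_SECOND_PER_SHARD),
            Math.multiplyExact(shards, MAX_BYTES_PER_SECOND_PER_SHARD));
    }

    public static void main(String[] args) {
        ShardThroughput limits = forShards(4);
        System.out.println(limits.maxRecordsPerSecond + " records/s, "
            + limits.maxBytesPerSecond + " bytes/s");
    }
}
```

Values computed this way would be candidates for maxRecordsPerSecond and maxBytesPerSecond; publishing faster than the stream can accept is what tends to trigger ProvisionedThroughputExceededException.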
This library exposes an Akka Streams Source backed by the KCL for checkpoint management, failover, load-balancing, and re-sharding capabilities.

Because the supplied client can be shared, individual GraphStages will not automatically shut down the client when they complete.

You have the choice of reading from a single shard, or reading from multiple shards.

A GraphStage completes once its shard has been closed. This means that if you wish to continue processing records after a merge or reshard, you will need to recreate the source with the results of a new DescribeStream request, which can be done by simply creating a new KinesisSource. You can read more about adapting to a reshard in the AWS documentation.

It uses dynamic size batches, can perform several requests in parallel and retries failed records. As of version 2, however, the library itself will not retry failed requests: this is handled by the underlying KinesisAsyncClient (see client configuration). Batching has a drawback: message order cannot be guaranteed, as some records within a single batch may fail to be published.


Sources and Flows provided by this connector need a KinesisAsyncClient instance to consume messages from a shard. More information can be found in the AWS documentation and the AWS API reference.

Another Kinesis connector which is based on the Kinesis Client Library 2.x is available. In order to use it, you need to provide a Scheduler builder and the Source settings. The KCL Scheduler Source publishes messages downstream that can be committed in order to mark progression of consumers by shard.

localstack provides implementations of several AWS services, including AWS Firehose and also S3.

Associating a user context with every message allows keeping track of which messages have been successfully sent to Kinesis even if the message order gets mixed up.
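The idea of tracking messages through a user context can be sketched without the library. In the following example every type and method (`Message`, `Result`, `publish`, `succeededContexts`) is a hypothetical stand-in rather than an Alpakka class, and the fake publisher simply reverses the results to imitate the reordering that batching may cause.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-ins for illustration only; none of these are Alpakka types.
final class ContextCorrelation {

    /** A payload paired with a caller-chosen context object. */
    static final class Message<C> {
        final String payload;
        final C context;

        Message(String payload, C context) {
            this.payload = payload;
            this.context = context;
        }
    }

    /** The outcome of publishing one message, carrying the same context back. */
    static final class Result<C> {
        final boolean success;
        final C context;

        Result(boolean success, C context) {
            this.success = success;
            this.context = context;
        }
    }

    /**
     * Fake publisher: empty payloads fail, and results come back in reverse
     * order to imitate the reordering that batching can cause.
     */
    static <C> List<Result<C>> publish(List<Message<C>> messages) {
        List<Result<C>> results = new ArrayList<Result<C>>();
        for (Message<C> message : messages) {
            results.add(new Result<C>(!message.payload.isEmpty(), message.context));
        }
        Collections.reverse(results);
        return results;
    }

    /** Collects the contexts of all successfully published messages. */
    static <C> List<C> succeededContexts(List<Result<C>> results) {
        List<C> contexts = new ArrayList<C>();
        for (Result<C> result : results) {
            if (result.success) {
                contexts.add(result.context);
            }
        }
        return contexts;
    }

    public static void main(String[] args) {
        List<Message<Integer>> batch = Arrays.asList(
            new Message<Integer>("first", 1),
            new Message<Integer>("", 2),
            new Message<Integer>("third", 3));
        System.out.println(succeededContexts(publish(batch)));
    }
}
```

Because each result carries its own context, the caller never has to rely on output position to know which message succeeded.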

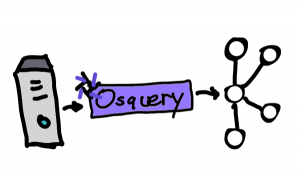

The AWS Kinesis connector provides flows for streaming data to and from Kinesis Data streams and to Kinesis Firehose streams. It is recommended to shut the client instance down on Actor system termination.

We are currently using Minio as our solution to S3 for local development and we are looking for a similar solution for AWS Firehose.

Flows provided by this connector need a FirehoseAsyncClient instance to publish messages. The client example uses Akka HTTP as the default HTTP client implementation.

The KinesisFirehoseFlow (or KinesisFirehoseSink) publishes messages into a Kinesis Firehose stream using its message body. Dynamic size batches and parallel requests are necessary to achieve the best possible write throughput to the stream.

The GraphStage associated with a shard will remain open until the graph is stopped, or a GetRecords result returns an empty shard iterator indicating that the shard has been closed.

This library combines the convenience of Akka Streams with KCL checkpoint management, failover, load-balancing, and re-sharding capabilities. In order to use the checkpointer Flow/Sink you must provide additional checkpoint settings. Note that the checkpointer Flow may not maintain the input order of records of different shards.
The KinesisSource creates one GraphStage per shard. Reading from a shard requires an instance of ShardSettings. In the case of multiple shards the results of running a separate GraphStage for each shard will be merged together. Because the supplied client can be shared, individual GraphStages will not automatically shut down the client when they complete.

The KCL Scheduler Source needs to create and manage Scheduler instances in order to consume records from Kinesis Streams. The KCL Source can read from several shards and rebalance automatically when other Schedulers are started or stopped. Please read more about the KCL 2.x based connector at GitHub StreetContxt/kcl-akka-stream.

The KinesisFlow (or KinesisSink) publishes messages into a Kinesis stream using its partition key and message body. The Flow outputs the result of publishing each record. That also means that the Flow output may not match the input order.

See AWS Retry Configuration for how to configure retrying for the FirehoseAsyncClient. Since the library does not retry failed requests itself, you may have to inspect individual responses to make sure they have been successful.
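Inspecting individual responses usually comes down to filtering for entries that carry an error. The sketch below shows that filtering step in plain Java. `EntryResult` is a hypothetical type invented for this example, and the convention that a non-null error code marks a failed record is an assumption modelled on how AWS batch responses report per-record errors, not a description of the connector's result classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for a per-record publish response; not an Alpakka or AWS SDK type.
final class ResponseInspection {

    static final class EntryResult {
        final String recordId;
        final String errorCode; // Assumption: null means the record was accepted.

        EntryResult(String recordId, String errorCode) {
            this.recordId = recordId;
            this.errorCode = errorCode;
        }

        boolean failed() {
            return errorCode != null;
        }
    }

    /** Returns only the entries that report an error, preserving their order. */
    static List<EntryResult> failures(List<EntryResult> results) {
        List<EntryResult> failed = new ArrayList<EntryResult>();
        for (EntryResult result : results) {
            if (result.failed()) {
                failed.add(result);
            }
        }
        return failed;
    }

    public static void main(String[] args) {
        List<EntryResult> results = Arrays.asList(
            new EntryResult("r1", null),
            new EntryResult("r2", "ProvisionedThroughputExceededException"));
        for (EntryResult failure : failures(results)) {
            System.out.println(failure.recordId + " failed: " + failure.errorCode);
        }
    }
}
```

What to do with the failed entries (log them, resubmit them, or fail the stream) is an application decision that this sketch leaves open.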

