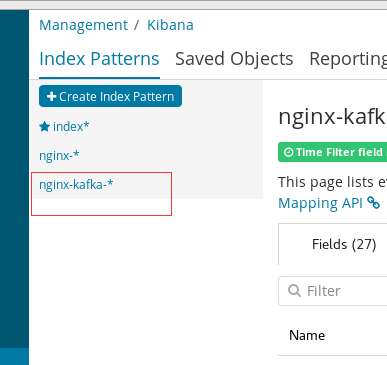

Logstash config file: This file contains the configuration data (input, filter, and output sections) for sending events from Citrix Analytics for Security using the Logstash data collection engine. If there are still two, you might have a hung or back ground process. The number of consumer threads. are you setting reset_beginning? Build, market, and sell your offerings with Tencent Cloud's Partner Network. Also where does the kafka input save the kafka position per partition ? } don't match what is running currently. index => "prod-logs-%{+YYYY.MM.dd}" Default value: SASL_SSL. However it is dropping events post a restart See the picture. {:timestamp=>"2016-02-10T18:17:30.498000+0000", :message=>"kafka client threw exception, restarting", :exception=>kafka.common.ConsumerRebalanceFailedException: logstash_logstash.c.rapid-depot-817.internal-1455128240517-a4fec7e7 can't rebalance after 4 retries, :level=>:warn} # Timeout period for the client to send a request to the Broker, which cannot be smaller than the value of replica.lag.time.max.ms configured for the Broker. Where are the above screenshots from? post upgrade the restart is working better logstash does not stall as long but it still does. hosts => ["elasticsearch-group-6t4p:9200"] (Aviso legal), Este texto foi traduzido automaticamente. Ensure that the password meets the following conditions: Select Configure to generate the Logstash configuration file. (Esclusione di responsabilit)). We use the high level consumer described here, which automatically stores offsets every 60s. Depending on the number of partitions you have and where the data resides to 0 with no output from the plugin. This Preview product documentation is Citrix Confidential.

`*_logs` would catch both. https://cloud.githubusercontent.com/assets/11711723/12957019/6fa65344-cff0-11e5-9abb-17c8d454cd15.png We have a backup on a Docker container, and I can see the logs with those timestamps there but not in Elasticsearch.

You agree to hold this documentation confidential pursuant to the THIS SERVICE MAY CONTAIN TRANSLATIONS PROVIDED BY GOOGLE. if [type] == "syslog" { are required for identity authentication. Check your group offsets. # If users do not configure this, the default value will be 1.

", :level=>:error} {:timestamp=>"2016-02-10T18:16:12.934000+0000", :level=>:warn, "INFLIGHT_EVENT_COUNT"=>{"total"=>0}, "STALLING_THREADS"=>{["LogStash::Inputs::Kafka", {"zk_connect"=>"zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181", "topic_id"=>"staging_logs", "consumer_threads"=>1, "consumer_restart_sleep_ms"=>100, "decorate_events"=>"true", "type"=>"staging_logs"}]=>[{"thread_id"=>20, "name"=>" I can assure you that this used to work previously while I had logstash 1.5. 9b2 is the non-working one. I have separate config files for each topic. (Aviso legal), Questo contenuto stato tradotto dinamicamente con traduzione automatica. DIESER DIENST KANN BERSETZUNGEN ENTHALTEN, DIE VON GOOGLE BEREITGESTELLT WERDEN. Now to explain your gap, or non-resumption problem, it appears that you have two independent consumers running on the same system (check your processes) each runs with the default id of logstash. {:timestamp=>"2016-02-10T18:17:30.603000+0000", :message=>"kafka client threw exception, restarting", :exception=>kafka.common.ConsumerRebalanceFailedException: logstash_logstash.c.rapid-depot-817.internal-1455128240492-69a5fb05 can't rebalance after 4 retries, :level=>:warn}. In the output section of the file, enter the destination path or details where you want to send the data. {:timestamp=>"2016-02-10T18:17:30.601000+0000", :message=>"kafka client threw exception, restarting", :exception=>kafka.common.ConsumerRebalanceFailedException: logstash_logstash.c.rapid-depot-817.internal-1455128240598-6c90460f can't rebalance after 4 retries, :level=>:warn} syslog { Users can customize this according to their business conditions. I also issued a partition reassignment request on kafka Currently, version 0.9 and earlier do not support compression, and version 0.10 and later do not support gzip compression. This configuration is a balance between production throughput and data reliability (messages may be lost if the leader is dead but has not been replicated yet). 
A single partition can only be consumed by a single consumer process in the same consumer group. It is important to select an appropriate number of partitions to fully play the performance of the CKafka instance.The number of partitions should be determined based on the throughput of production and consumption, ideally through the following formula: Num represents the number of partitions, T the target throughput, PT the maximum production throughput by the producer to a single partition, and CT the maximum consumption throughput by the consumer from a single partition. Logstash establishes a connection to Message Queue for Apache Kafka by using a Message Queue for Apache Kafka endpoint. If just one group is acting on the topic you should just see just one owner listed. Now this happend twice this week, and everytime I restarted logstash kafka input the plugin continued from the latest point losing all the logs. If you aren't seeing the logs in Elasticsearch in the timeline where you expect them, I'd guess the date filter isn't firing correctly. "logstash", The default Kafka value for enable.auto.commit (enable_auto_commit) in logstash is "true". Also note that the rebalance exception is for consumers that no longer The more partitions there are, the longer it takes to elect a leader upon failure. We recommend that you set this parameter to a value The password of your Internet- and VPC-connected Message Queue for Apache Kafka instance. Specifies how the consumer offset is reset. You signed in with another tab or window. Powered by a free Atlassian Jira open source license for Apache Software Foundation. The username of your Internet- and VPC-connected Message Queue for Apache Kafka instance. {:timestamp=>"2016-02-10T18:16:02.928000+0000", :message=>"SIGTERM received. 
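The partition-count formula that the text introduces with "the following formula" is not reproduced in it. Going by the statement elsewhere in this document that the number of partitions equals T/PT or T/CT, whichever is larger, it can be written as follows (a reconstruction from that sentence, not a copy of the original figure):

$$
\text{Num} = \max\left(\frac{T}{PT},\ \frac{T}{CT}\right)
$$

As a purely hypothetical illustration, with a target throughput of T = 100 MB/s, a per-partition production limit of PT = 10 MB/s, and a per-partition consumption limit of CT = 20 MB/s, this gives Num = max(10, 5) = 10 partitions.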
# The following describes the 3 ACK mechanisms supported by a Kafka producer: # -1 or all: the Broker responds to the producer and continues to send the next message or next batch of messages only after the leader receives the data and syncs it to followers in all ISRs. Kafka group.id (group_id in logstash kafka configuration) is set to the default for logstash, i.e. @r-tock are the LSs with 0 lag experiencing the loss of messages? https://gist.github.com/r-tock/f97b695d21574b4a69cf, https://cloud.githubusercontent.com/assets/11711723/12957019/6fa65344-cff0-11e5-9abb-17c8d454cd15.png, logstash_logstash.c.rapid-depot-817.internal-1454540628336-eb1539b2-0, logstash_logstash.c.rapid-depot-817.internal-1454540638489-ffc0a0fe-0. My logstash configuration is noted above in the very first post. Take a close look at those logs because that big bump in logs on your timeseries sure looks like a back filling bump where all the logs are being stamped as they are hit in logstash, hence them only appearing when you run logstash. When I look at the consumer info on kafka, these instance owner ids (Clause de non responsabilit), Este artculo lo ha traducido una mquina de forma dinmica. To stop transmitting data from Citrix Analytics for Security: Go to Settings > Data Sources > Security > DATA EXPORTS. If you run the consumer now, since there is no existing offsets for some_random_group and the reset is earliest, the consumer should consume all the existing messages in a topic and commit the offsets. There is an existing group with the same group id ("logstash") and some consumer with this group id has already consumed the existing messages and commited the offsets (this other consumer might have been the one ran by you previously or some other consumers with the same group id). Could you check your consumer instance owners again? exist. 
# 0: the producer continues to send the next message or next batch of messages without waiting for acknowledgement of synchronization completion from the Broker. You can post your full config there and myself and others will be happy to provide more input. 0f3 appears to be fine, it has no offset lag. Now I will need to verify the restart behavior. @r-tock Can you try bin/plugin install --version 2.0.4 logstash-input-kafka, It seems I cannot bundle update because this is within the binary. exception. You should set kafka input plugin setting auto_offset_reset to "earliest". {:timestamp=>"2016-02-10T18:16:17.935000+0000", :level=>:warn, "INFLIGHT_EVENT_COUNT"=>{"total"=>0}, "STALLING_THREADS"=>{["LogStash::Inputs::Kafka", {"zk_connect"=>"zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181", "topic_id"=>"staging_logs", "consumer_threads"=>1, "consumer_restart_sleep_ms"=>100, "decorate_events"=>"true", "type"=>"staging_logs"}]=>[{"thread_id"=>20, "name"=>" Connect a Message Queue for Apache Kafka instance to Logstash as an input. # Number of retries upon request error. The following are the configurations of a CKafka Broker for your reference: For configurations not listed here, see the open-source Kafka default configurations. elasticsearch { that sounds good. Reply to this email directly or view it on GitHub If there is an indexing failure the logstash logs usually have that info and I cannot see anything there. Logs are warning about stalling threads and still have consumer rebalance All I could see from kafka manager is offsets suddenly changing from lag to 0 with no output from the plugin. # Maximum size of the request packet that the producer can send, which defaults to 1 MB. For more information about parameter settings, see Kafka input plugin. }. 
Logstash 5.1.1 kafka input doesn't pick up existing messages on topic Unless you can show us an exception or repeatable way to simulate the issue, I think this discussion is better left for the Logstash discussion group: https://discuss.elastic.co/c/logstash flush_size => 100 You can also use your existing Logstash instance. Run the following command to download the. {:timestamp=>"2016-02-10T18:16:07.953000+0000", :level=>:warn, "INFLIGHT_EVENT_COUNT"=>{"total"=>0}, "STALLING_THREADS"=>{["LogStash::Inputs::Kafka", {"zk_connect"=>"zookeeper-1:2181,zookeeper-2:2181,zookeeper-3:2181", "topic_id"=>"prod_logs", "consumer_threads"=>1, "consumer_restart_sleep_ms"=>100, "decorate_events"=>"true", "type"=>"prod_logs"}]=>[{"thread_id"=>18, "name"=>" The official version of this content is in English. On the SIEM site card, select Get Started. All I could see from kafka manager is offsets suddenly changing from lag the stuck process has the logs in their lag, so once you kill it, you should see the rest of the logs. If the leader is dead but the producer is unaware of that, the Broker cannot receive messages). Dieser Inhalt ist eine maschinelle bersetzung, die dynamisch erstellt wurde. index => "syslog-%{+YYYY.MM.dd}" Can you please check your consumer offsets? This configuration provides the highest data reliability, as messages will never be lost as long as one synced replica survives. Logs below don't show any issue. For more information, see, Java Development Kit (JDK) 8 is downloaded and installed. Have a question about this project? Consumer loses partition offset and resets post 2.4.1 version upgrade, KAFKA-9838 This means that if after consuming all the messages you run the consumer again, it will not consume the existing messages. change without notice or consultation. message as prompted. Not sure if this is related to the issue, want to eliminate the possibility, Ok. 
Do you want me to restart the instance now after removing consumer_restart_on_error, is there anything else I can give help on before the instance is restarted. privacy statement. Documentation. From the producer's point of view, writes to different partitions are completely in parallel; from the consumer's point of view, the number of concurrencies depends entirely on the number of partitions (if there are more consumers than partitions, there will definitely be idle consumers). On Feb 10, 2016 1:22 PM, "Robin Anil" notifications@github.com wrote: post upgrade the restart is working better logstash does not stall as long action => "index" Restart your host machine to send processed data from Citrix Analytics for Security to your SIEM service. No warranty of any kind, either expressed or implied, is made as to the accuracy, reliability, suitability, or correctness of any translations made from the English original into any other language, or that your Citrix product or service conforms to any machine translated content, and any warranty provided under the applicable end user license agreement or terms of service, or any other agreement with Citrix, that the product or service conforms with any documentation shall not apply to the extent that such documentation has been machine translated. https://gist.github.com/r-tock/f97b695d21574b4a69cf Message Queue for Apache Kafka supports the following log cleanup policies: Perform the following operations to send messages to the topic that you created: Perform the following operations to create a consumer group for Logstash. Ensure that the following endpoint is in the allow list in your network. The security protocol. I have currently a case where the logstash kafka consumer is lagging behind. How do I consume a message after the message is sent? The security authentication mechanism. Attached is grafana graph with consumer lag per partition. the pipeline shouldn't stall. 
This file is required when you integrate your SIEM using Kafka. I guess there is some behavior issue with stop & start v/s restart and stop and start don't seem to allow the consumer to continue. By clicking Sign up for GitHub, you agree to our terms of service and For information on the output plug-ins, see the Logstash documentation. } This parameter is 0 by default, indicating immediate send. SSL truststore location: The location of your SSL client certificate. # 1: the producer sends the next message or next batch of messages after it receives an acknowledgement that the leader has successfully received the data. } I am using the latest version of the plugin with the following kafka config. -rw-r--r-- 1 root root 506 Feb 8 23:37 kafka-demo-console-logs.conf action => "index" You do not need to change At some point in the life-time of logstash, some of the partition readers seem wedged. If you do not agree, select Do Not Agree to exit. yesterday, so I cannot see anything weird on the kafka side. The documentation is for informational purposes only and is not a pop'"}], ["LogStash::Inputs::Syslog", {"type"=>"syslog", "port"=>10000}]=>[{"thread_id"=>22, "name"=>" Are you expecting instance owners to be different across topics? -rw-r--r-- 1 root root 518 Feb 8 23:35 kafka-staging-console-logs.conf again and look for the message. lru_cache_size => 10000 This is the logstash log from the last restart 4 days ago. Sorry I was on a vacation. The first This article describes the steps that you must follow to integrate your SIEM with Citrix Analytics for Security by using Logstash. @talevy I tried that before 2 weeks ago I believe I got a ruby gem install error. The other was working on it's half so there is a chance that it just had the up to date logs in that partition. 
}, output { ", :level=>:error} {:timestamp=>"2016-02-10T18:16:22.934000+0000", :level=>:warn, "INFLIGHT_EVENT_COUNT"=>{"total"=>0}, "STALLING_THREADS"=>{["LogStash::Inputs::Syslog", {"type"=>"syslog", "port"=>10000}]=>[{"thread_id"=>22, "name"=>" with the new instance. However, an increase will result in message rebalance. @r-tock can you live debug with me and @talevy ? A single consumer process can consume multiple partitions simultaneously, so partition limits the concurrency of consumers. port => 10000 this was the default restart i.e. All sudden spikes in lag are offset resets due to this bug. After upgrade from kafka 2.1.1 to 2.4.0, I'm experiencing unexpected consumer offset resets. Looking at your consumer offsets from above, it looks like you have two different logstash-input-kafka inputs running on the same group. commitment, promise or legal obligation to deliver any material, code or functionality }, input { The username and password of your Message Queue for Apache Kafka instance stdout to make sure the log comes through Logstash. Valid values: Logstash is downloaded and installed. [image: screen shot 2016-02-10 at 12 17 55 pm] The following parameters are required to integrate using Kafka: If your SIEM does not support Kafka endpoints, then you can use the Logstash data collection engine. We recommend that the number of partitions be greater than or equal to that of consumers to achieve maximum concurrency. We have only one logstash consumer instance btw and all of them are reading from 20 partitions over about 10ish topics, @r-tock can you remove consumer_restart_on_error. #68 (comment) {:timestamp=>"2016-02-10T18:17:30.465000+0000", :message=>"kafka client threw exception, restarting", :exception=>kafka.common.ConsumerRebalanceFailedException: logstash_logstash.c.rapid-depot-817.internal-1455128240517-d3980226 can't rebalance after 4 retries, :level=>:warn} bin/kafka-run-class.sh kafka.tools.ConsumerOffsetChecker. 
} The public endpoint the created topic. #49. b:144:in ESTE SERVICIO PUEDE CONTENER TRADUCCIONES CON TECNOLOGA DE GOOGLE. will leave that your judgement. consumer_restart_sleep_ms => 100 that is the same as the number of partitions of the topic. (Haftungsausschluss), Ce article a t traduit automatiquement. } "Lag" even in the low 100's isn't that bad and you will generally always see some lag because it is a thread working across many partitions and you are always adding logs. earliest: Consumption starts from the earliest message. Contact CAS-PM-Ext@citrix.com to request assistance for your SIEM integration, exporting data to your SIEM, or provide feedback. The occam's razor explanation is that the logstash kafka input is opening up two inputs for the same group. Offset can be down to the partition level. To enable data transmission again, on the SIEM site card, click Turn on Data Transmission. in the Kafka queue you may see different log times appear in elasticsearch } } }, output { Please reopen if it still happens, Kafka logstash input do not continue from where it left off on restart. The number of partitions is equal to T/PT or T/CT, whichever is larger. {:timestamp=>"2016-02-10T18:16:27.936000+0000", :level=>:warn, "INFLIGHT_EVENT_COUNT"=>{"input_to_filter"=>1, "total"=>1}, "STALLING_THREADS"=>{"other"=>[{"thread_id"=>80, "name"=>">output", "current_call"=>"[]/vendor/bundle/jruby/1.9/gems/logstash-output-elasticsearch-2.4.1-java/lib/logstash/outputs/elasticsearch/buffer.rb:51:in `join'"}]}} Please find the jstack output for the process in the gist above. Citrix has no control over machine-translated content, which may contain errors, inaccuracies or unsuitable language. -rw-r--r-- 1 root root 581 Feb 8 23:37 kafka-demo-ex-logs.conf latest: Consumption starts from the latest message. 
Your tests have show than no other consumers were working on that group when it was deactivated, that consumer offsets did increase once consumption resumed and you even see logs coming through. match => [ "timestampMs", "UNIX_MS" ] Is this a limitation of this plugin? The development, release and timing of any features or functionality } consumer_threads => 1 Dieser Artikel wurde maschinell bersetzt. Well occasionally send you account related emails. }, filter { This parameter sets the maximum blocking time. The log.retention.ms configuration of a topic is set through the retention period of the instance in the console. Please try again, Security Information and Event Management integration, Integrate with a SIEM service using Logstash. Kafka auto.offset.reset (auto_offset_reset) does not have a default value in logstash so I assume the Kafka default value of latest is used. A Message Queue for Apache Kafka instance can be connected to Logstash as an input. type => "prod_logs" # Configure the memory that the producer uses to cache messages to be sent to the Broker. and is the original config your config that you are using now? I can't see anything specific to the issue via the jstack. The number of partitions can be dynamically increased but not reduced. See the following for reference: At present, the number of replicas must be at least 2 to ensure availability. sudo service logstash restart. verify lag induction, place another message on the topic, start Logstash There was an error while submitting your feedback. has the processes catch up. Enter the following content in the configuration file: Run the following command to consume messages: : Connect a Message Queue for Apache Kafka instance to Logstash as an output, Purchase and deploy an instance that allows access from the Internet and a VPC. In hindsight I should have reported that, I just didn't have the time to follow up on that. this value. 
Since you haven't specified a group id for kafka, the imporant considerations are the following: So when you run the consumer on some topic and it fails to pick up the messages already in the topic, one of two things is likely happening: So what you likely want to do is set some Kafka configration, for logstash you should be able to set. We'll contact you at the provided email address if we require more information. hosts => ["elasticsearch-group-6t4p:9200"] You could use a whitelist filter to have one thread do them all . geoip { by default this group is called logstash. But I am waiting from the devs to tell me how to debug this, we are losing logs because of this bug. GOOGLE RENUNCIA A TODAS LAS GARANTAS RELACIONADAS CON LAS TRADUCCIONES, TANTO IMPLCITAS COMO EXPLCITAS, INCLUIDAS LAS GARANTAS DE EXACTITUD, FIABILIDAD Y OTRAS GARANTAS IMPLCITAS DE COMERCIABILIDAD, IDONEIDAD PARA UN FIN EN PARTICULAR Y AUSENCIA DE INFRACCIN DE DERECHOS. I would still check the consumer instance owners again. On the SIEM site card, select the vertical ellipsis () and then click Turn off data transmission. # Compression format configuration.
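The advice above about `group_id` and `auto_offset_reset` can be sketched as a Logstash input block. This is a minimal sketch, assuming a version of the Kafka input plugin that takes `bootstrap_servers` and `topics` (the older `zk_connect` / `topic_id` options seen in the log output do not apply to it). The broker address and the group name are hypothetical placeholders; the topic name is borrowed from the log output.

```
input {
  kafka {
    # Hypothetical broker address; replace with your own.
    bootstrap_servers => "localhost:9092"
    # Topic name taken from the log output in this document.
    topics => ["prod_logs"]
    # Hypothetical dedicated group id instead of the default "logstash",
    # so offsets committed by other consumers do not affect this pipeline.
    group_id => "prod_logs_consumer"
    # Only takes effect when the group has no committed offset yet.
    auto_offset_reset => "earliest"
    consumer_threads => 1
  }
}
```

With a group id that has never committed offsets, `earliest` makes the first run read the messages already in the topic. On later runs the consumer resumes from the committed offsets, so the setting no longer has any effect.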
