3) Start the Kinesis Agent service. usage charges. Use the following procedure to test your delivery stream using Amazon OpenSearch Service as the destination. Kinesis Data Firehose then delivers each dataset to the evaluated S3 prefix. The time in theevent_timestampfield might be in a different time zone. Attach the above IAM Role to the instance, 2) Edit the file /etc/aws-kinesis/agent.json and add the lines below (Note: Since I have used us-east-1 as Region, I dont need to mention in the file as it is the default Region. (Optional) Delete the firehose_test_table table. You can do even more with the jq JSON processor, including accessing nested fields and create complex queries to identify specific keys among the data. Click Next. 7) in the Step 5: Review Page click Create delivery stream and your Firehose setup is ready now. Also check whether the streaming data does not have the Change attribute as well. For more information, see Searching Documents in an OpenSearch Service ), Make sure that aws-kinesis-agent-user has read permission for the logfile access_log. aws-resource-kinesisfirehose-deliverystream. Under Test with demo data, choose Start sending this guide on how to create a Kinesis Data Stream. For example, marketing automation customers can partition data on the fly by customer ID, which allows customer-specific queries to query smaller datasets and deliver results faster. With the launch of Kinesis Data Firehose Dynamic Partitioning, you can now enable data partitioning to be dynamic, based on data content within the AWS Management Console, AWS Command Line Interface (AWS CLI), or AWS SDK when you create or update an existing Kinesis Data Firehose delivery stream. It groups records that match the same evaluated S3 prefix expression into a single dataset.

It can also batch, compress, transform, and encrypt your data streams before loading, which minimizes the amount of storage used and increases security. If no value is specified, the default is UNCOMPRESSED. This makes it possible to clean and organize data in a way that a query engine like Amazon Athena or AWS Glue would expect. Specify the template. 4) On the Step 2: Process records page, set both Record transformation and Record format conversion to Disabled. Amazon S3, an easy-to-use object storage service; Amazon Redshift, a petabyte-scale data warehouse; Amazon Elasticsearch Service, an open source search and analytics engine; Splunk, an operational intelligence tool for analyzing machine-generated data. Note: On Amazon Linux 2, use the command # yum install -y https://s3.amazonaws.com/streaming-data-agent/aws-kinesis-agent-latest.amzn1.noarch.rpm. To use the dynamic partitioning feature with non-JSON records, use the integrated Lambda function with Kinesis Data Firehose to transform and extract the fields needed to properly partition the data by using JSONPath. The following are the available attributes and sample return values. Amazon Kinesis Data Analytics: to process and analyze streaming data using standard SQL. If the incoming data is compressed, encrypted, or in any other file format, you can include the data fields for partitioning in the PutRecord or PutRecords API calls. The remaining options can be left at their defaults. This limit is adjustable, and if you want to increase it, you'll need to submit a support ticket for a limit increase. Given the same event, I'll use both the device and customer_id fields in the Kinesis Data Firehose prefix expression, as shown in the following screenshot. 2) On the next screen, click Create delivery stream in the Firehose section. You can stop the sample stream from the console at any time.
https://aws.amazon.com/blogs/big-data/kinesis-data-firehose-now-supports-dynamic-partitioning-to-amazon-s3/, Amazon Simple Storage Service (Amazon S3). To begin delivering dynamically partitioned data into Amazon S3, navigate to the Amazon Kinesis console page by searching for or selecting Kinesis. The delivery stream will deliver each buffer of data as a single object when the size or interval limit has been reached, independent of other data partitions. Got to your S3 console and access the S3 Bucket which is set for logging. An example of this would be segmenting incoming Internet of Things (IoT) data based on what type of device generated it: Android, iOS, FireTV, and so on. Go to the destination S3 bucket and verify Whether the Streaming data has Uploaded in S3. You can monitor the throughput with the new metric called PerPartitionThroughput. Kinesis Data Firehose evaluates the prefix expression at runtime. You have option of selecting the best based on your scenario. We also covered how to develop and optimize a Kinesis Data Firehose pipeline by using dynamic partitioning and the best practices around building a reliable delivery stream. TheAWS::KinesisFirehose::DeliveryStreamresource creates an Amazon Kinesis Data Firehose delivery stream that delivers near-real-time streaming data to an Amazon Simple Storage Service (Amazon S3), Amazon Redshift, or Amazon Elasticsearch Service (Amazon ES) destination. When the logical ID of this resource is provided to the Ref intrinsic function, Ref returns the delivery stream name, such asmystack-deliverystream-1ABCD2EF3GHIJ. It might take a few minutes for new objects to appear in your bucket, based on the buffering configuration of your bucket. This enables you to test the configuration of your delivery stream You must specify only one destination configuration. Follow thisAmazon Kinesis Data Firehose documentationif you want send the data to another destination create-destination-s3, Domain. 
( Record Transformation allows your streams go though Lambda functions to transform the record and inject back to Firehose. Conditional. It is a fully managed service that automatically scales to match the throughput of your data and requires no ongoing administration. Specify the Stack details. This is how analytics query engines like Amazon Athena, Amazon Redshift Spectrum, or Presto are designed to workthey prune unneeded partitioning during query execution, thereby reducing the amount of data that is scanned and transferred. Upon delivery to Amazon S3, the buffer that previously held that data and the associated partition will be deleted and deducted from the active partitions count in Kinesis Data Firehose. Michael Greenshteins career started in software development and shifted to DevOps. The Resources section of this file defines the resources to be provisioned in the stack. Amazon Kinesis Data Firehose Delivery Stream for S3 using Cloudformation has been created and tested successfully. DirectPut: Provider applications access the delivery stream directly. I have used Amazon Linux AMI-1. your table. a SQL interface, Searching Documents in an OpenSearch Service Example search Also enter a Prefix. The generated S3 folder structure will be as follows, where  for failed events. This not only saves time but also cuts down on additional processes after the fact, potentially reducing costs in the process. Consider a scenario where your analytical data lake in Amazon S3 needs to be filtered according to a specific field, such as a customer identificationcustomer_id. First, lets discuss why you might want to use dynamic partitioning instead of Kinesis Data Firehoses standard timestamp-based data partitioning. You can use the Amazon Web Services Management Console to ingest simulated stock ticker data. Jeremy Ber has been working in the telemetry data space for the past 5 years as a Software Engineer, Machine Learning Engineer, and most recently a Data Engineer. 
In the following example, I decide to store events in a way that will allow me to scan events from the mobile devices of a particular customer. The Kinesis Data Firehose configuration for the preceding example will look like the one shown in the following screenshot. without having to generate your own test data. When control comes back to old window Click Next. KinesisStreamAsSource: The delivery stream uses a Kinesis data stream as a source. It can also compress, transform, and encrypt the data in the process of loading the stream. At a high level, Kinesis Data Firehose Dynamic Partitioning allows for easy extraction of keys from incoming records into your delivery stream by allowing you to select and extract JSON data fields in an easy-to-use query engine. Javascript is disabled or is unavailable in your browser. If the test data doesn't appear in your Splunk index, check your Amazon S3 bucket 6) In Step 4: Configure settings page , click Create new or choose for IAM role. Well then discuss some best practices around what makes a good partition key, how to handle nested fields, and integrating with Lambda for preprocessing and error handling. Configure the Stack options. You can now partition your data by customer_id, so Kinesis Data Firehose will automatically group all events with the samecustomer_idand deliver them to separate folders in your S3 destination bucket. In this experiment, we configure Firehose delivery stream which accepts the Apache web server logs from an EC2 instance and stores into an S3 bucket. An Amazon S3 destination for the delivery stream.

The YYYY/MM/DD/HH time format prefix is automatically used for delivered Amazon S3 files. Kinesis Data Firehose has built-in support for extracting the keys for partitioning records that are in JSON format. 5. Review and Deploy the Stack.

The data processing configuration for the Kinesis Data Firehose delivery stream. Amazon Kinesis Data Firehose: to deliver near-real-time streaming data to destinations such as Amazon S3 and Amazon Redshift. It is a managed service that can scale up to the required throughput of your data. Click Next. When you decide which fields to use for dynamic data partitioning, it's a fine balance between picking fields that match your business case and taking the partition count limits into consideration. The records you might find there are the events without the field you specified as your partition key. You can specify up to 50 tags when creating a delivery stream.


Now he works at AWS as a Solutions Architect in the Europe, Middle East, and Africa (EMEA) region, supporting a variety of customer use cases. Use the following procedure to test your delivery stream using Amazon Simple Storage Service (Amazon S3) as the destination. If you exceed the maximum number of active partitions, the rest of the records in the delivery stream will be delivered to the S3 error prefix. All failed records will be delivered to the error prefix. Add tags (optional) and click Next. You now have the option to enable the following features, shown in the screenshot below: You can simply add your key/value pairs, then choose Apply dynamic partitioning keys to apply the partitioning scheme to your S3 bucket prefix. Kinesis Data Firehose data partitioning simplifies the ingestion of streaming data into Amazon S3 data lakes by automatically partitioning data in transit before it's delivered to Amazon S3.

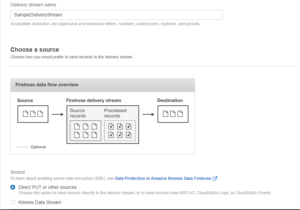

A template is a JSON or YAML file that describes your stack's resources and properties. See Top 10 Performance Tuning Tips for Amazon Athena. Kinesis Data Firehose Dynamic Partitioning is billed per GB of partitioned data delivered to S3, per object, and optionally per jq processing hour for data parsing. Because Kinesis Data Firehose is billed per GB of data ingested, which is calculated as the number of data records you send to the service times the size of each record rounded up to the nearest 5 KB, you can put more data into each ingestion call. Another way to optimize cost is to aggregate your events into a single PutRecord or PutRecordBatch API call. Assume that you want to have the following folder structure in your S3 data lake. For more information, see our pricing page. The delivery stream type. Make sure that Source is set to Direct PUT or other sources. The condition that is satisfied first triggers data delivery to Amazon S3. For delivery streams without the dynamic partitioning feature enabled, there will be one buffer across all incoming data.
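The rounding rule above can be made concrete with a little arithmetic. The following is only a sketch of the "rounded up to the nearest 5 KB" calculation as described in this post; the function name `billed_bytes` is hypothetical, and it assumes 1 KB = 1024 bytes, which the text does not state. Actual charges depend on the pricing page for your Region.

```python
import math

# Assumption: 1 KB is treated as 1024 bytes for this illustration.
BILLING_INCREMENT_BYTES = 5 * 1024


def billed_bytes(record_sizes):
    """Sum of record sizes, each rounded up to the next 5 KB increment."""
    return sum(
        math.ceil(size / BILLING_INCREMENT_BYTES) * BILLING_INCREMENT_BYTES
        for size in record_sizes
    )


# Five 1 KB events sent as five separate records.
separate = billed_bytes([1024] * 5)
# The same five events aggregated into a single 5 KB record.
aggregated = billed_bytes([5 * 1024])

print(separate, aggregated)
```

Under these assumptions, the five small records are counted as 25 KB in total, while the single aggregated record is counted as 5 KB, which is why aggregating events before ingestion can lower the ingested volume you are billed for.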

3. Customers who use Amazon Kinesis Data Firehose often want to partition their incoming data dynamically based on information that is contained within each record, before sending the data to a destination for analysis. To stop incurring these charges, The cost can vary based on the AWS Region where you decide to create your stream. The right partitioning can help you to save costs related to the amount of data that is scanned by analytics services like Amazon Athena. When you finish testing, choose Stop sending demo data to Amazon Kinesis Data Firehose provides a convenient way to reliably load streaming data into data lakes, data stores, and analytics services. When your dynamic partition query scans over this record, it will be unable to locate the specified key of customer_id, and therefore will result in an error. With Kinesis Data Firehose Dynamic Partitioning, you have the ability to specify delimiters to detect or add on to your incoming records. When data partitioning is enabled, Kinesis Data Firehose will have a buffer per partition, based on incoming records. All the latest content will be available there. This is one of the many ways you can send the logs to S3. Adjust the commands with your Linux version. Against S3 bucket click Create new and create a new S3 bucket. Login to the EC2 instance as root and Install aws-kinesis-agent RPM package. Data partition functionality is run after data is de-aggregated, so each event will be sent to the corresponding Amazon S3 prefix based on the partitionKey field within each event. Use the following procedure to test your delivery stream using Amazon Redshift as the destination. https://console.amazonaws.cn/firehose/. By continuing to use the site, you agree to the use of cookies. your OpenSearch Service domain.

Consider the following records that were ingested to my delivery stream. Note that it might take a few minutes for new rows to appear in your Follow the onscreen instructions to verify that data is being delivered to TheS3DestinationConfigurationproperty type specifies an Amazon Simple Storage Service (Amazon S3) destination to which Amazon Kinesis Data Firehose (Kinesis Data Firehose) delivers data. Tags are metadata. Keep the defaults for the remaining settings, and then choose Create delivery stream. that accepts the sample data. In the past, Jeremy has supported and built systems that stream in terabytes of data per day and process complex machine learning algorithms in real time. index="name-of-your-splunk-index". Fn::GetAtt returns a value for a specified attribute of this type. Finally, well cover the limits and quotas of Kinesis Data Firehose dynamic partitioning, and some pricing scenarios. Now kinesis data firehose delivery stream has been created. Amazon Kinesis provides four types of Kinesis streaming data platforms. IT operations or security monitoring customers can create groupings based on event timestamps that are embedded in logs, so they can query smaller datasets and get results faster. Conditional. This will start sending records to the delivery stream. It load streaming data into data lakes, data stores, and analytics services. The difficulty in identifying particular customers within this array of data is that a full file scan will be required to locate any individual customer. The Amazon Resource Name (ARN) of the AWS credentials. This can be of following values: Give the Stack name of your choice.

If not, follow this guide on how to create a Kinesis Data Stream. You can choose a buffer size (1 to 128 MB) or a buffer interval (60 to 900 seconds). Amazon Kinesis is a service provided by Amazon that makes it easy to collect, process, and analyze near-real-time streaming data at massive scale. Michael worked with AWS services to build complex data projects involving real-time, ETL, and batch processing. But there is no charge when the data is generated. You can configure a Firehose delivery stream from the AWS Management Console and send the data to Amazon S3, Amazon Redshift, or Amazon Elasticsearch Service. Note that it might take a few minutes for new objects to appear. Use the following procedure to test your delivery stream using Splunk as the destination. Edit the destination details for your Kinesis Data Firehose delivery stream to point to another table. I have used Amazon Linux 1 in the us-east-1 Region. Connect to Amazon Redshift through a SQL interface and run the following statement to create a table. To transform your source records with AWS Lambda, you can enable data transformation. Notice that device is a nested JSON field. You can select and extract the JSON data fields to be used in partitioning by using JSONPath syntax. 2) Create the instance. The Amazon Resource Name (ARN) of the Amazon S3 bucket. Amazon Kinesis applications can be used to build dashboards, capture exceptions and generate alerts, drive recommendations, and make other near-real-time business or operational decisions. Kinesis Data Firehose Dynamic Partitioning has a limit of 500 active partitions per delivery stream while it is actively buffering data; in other words, the limit applies to how many active partitions exist in the delivery stream during the configured buffering hints. You can also use the integrated Lambda function with your own custom code to decompress, decrypt, or transform the records to extract and return the data fields that are needed for partitioning. firehose_test_table table.
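To illustrate what selecting a nested field as a partition key means, here is a minimal plain-Python sketch. It is not the query engine Kinesis Data Firehose uses; the record shape (`device.type`), the `customer_id=.../device=.../` prefix layout, the `errors/` prefix, and the helper names `extract_field` and `build_prefix` are all assumptions made for this example.

```python
import json


def extract_field(record, dotted_path):
    """Walk a dotted path such as 'device.type' through nested dicts."""
    value = record
    for key in dotted_path.split("."):
        if not isinstance(value, dict) or key not in value:
            raise KeyError(dotted_path)
        value = value[key]
    return value


def build_prefix(record, error_prefix="errors/"):
    """Return a partition prefix, or the error prefix if a key is missing."""
    try:
        customer = extract_field(record, "customer_id")
        device = extract_field(record, "device.type")
    except KeyError:
        return error_prefix
    return f"customer_id={customer}/device={device}/"


# Hypothetical event with a nested device field.
event = json.loads('{"customer_id": "123", "device": {"type": "mobile"}}')
print(build_prefix(event))                            # customer_id=123/device=mobile/
print(build_prefix({"device": {"type": "mobile"}}))   # errors/
```

The second call shows the failure case in miniature: a record without the partition key cannot be assigned a partition, so the sketch routes it to a separate error location instead.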
Let's test the created delivery stream. At AWS, he is a Solutions Architect Streaming Specialist, supporting both Amazon Managed Streaming for Apache Kafka (Amazon MSK) and Amazon Kinesis. You can monitor the number of active partitions with the new metric PartitionCount, as well as the number of partitions that have exceeded the limit with the metric PartitionCountExceeded. Before implementing the application, let's understand what Amazon Kinesis and Amazon Kinesis Data Firehose are. Open the Kinesis Data Firehose console at Mention the AWS Region name, otherwise.

After data begins flowing through your pipeline, within the buffer interval you will see data appear in S3, partitioned according to the configurations within your Kinesis Data Firehose. When there are no more records for "customer_id": "123", Kinesis Data Firehose will delete the buffer and will keep only two active partitions. As we'll discuss in the walkthrough later in this post, extracting a well-distributed set of partition keys is critical to optimizing your Kinesis Data Firehose delivery stream that uses dynamic partitioning. When the test is complete, choose Stop sending demo data to stop incurring usage charges. Kinesis Data Firehose buffers incoming data before delivering it to Amazon S3. Check whether the data is being delivered to your Splunk index. Use the integrated Lambda function with your own custom code.
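The per-partition buffering described above can be pictured with a toy model. This is only a sketch of the bookkeeping, not how the service is implemented; the class `PartitionBuffers` and its methods are hypothetical, and the default of 500 simply mirrors the active partition limit quoted in this post.

```python
class PartitionBuffers:
    """Toy model: one buffer per partition key, removed on delivery."""

    def __init__(self, max_active=500):
        self.max_active = max_active
        self.buffers = {}
        self.rejected = []  # stand-in for records sent to the error prefix

    def add(self, key, record):
        # A new key beyond the limit cannot open another buffer.
        if key not in self.buffers and len(self.buffers) >= self.max_active:
            self.rejected.append(record)
            return
        self.buffers.setdefault(key, []).append(record)

    def deliver(self, key):
        """Return the buffered records and drop the buffer for this key."""
        return self.buffers.pop(key, [])

    @property
    def active_partitions(self):
        return len(self.buffers)


buffers = PartitionBuffers()
for customer in ("123", "124", "125"):
    buffers.add(customer, {"customer_id": customer})
print(buffers.active_partitions)  # 3

buffers.deliver("123")            # no more records for customer 123
print(buffers.active_partitions)  # 2
```

Three customers produce three active partitions, and delivering the buffer for customer 123 leaves two, matching the example in the text.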

Partitioning data like this will result in less data scanned overall. Follow the onscreen instructions to verify that data is being delivered. Now consider the data partitioned by the identifying field, customer_id. For this example, you will receive data from a Kinesis Data Stream, but you can also choose Direct PUT or other sources as the source of your delivery stream. I used apache-log-105 and server-1 respectively. 5) On the Step 3: Choose destination page, select Amazon S3 as the Destination. Amazon Kinesis Data Firehose is a fully managed service provided by Amazon to deliver near-real-time streaming data to destinations provided by Amazon services. Amazon Kinesis Data Streams: to collect and process large streams of data records in near real time. We advise you to align your partitioning keys with your analysis queries downstream to promote compatibility between the two systems. After sending demo data, click Stop sending demo data to avoid further cost. This limit is not adjustable. demo data to generate sample stock ticker data. Keep in mind that you will also need to supply an error prefix for your S3 bucket before continuing. Now assume that you want to partition your data based on the time when the event was actually sent, as opposed to using Kinesis Data Firehose native support for ApproximateArrivalTimestamp, which represents the time in UTC when the record was successfully received and stored in the stream.
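Partitioning by the time an event was sent usually means normalizing the timestamp first, because the event's own clock may be in a different time zone than UTC. The sketch below shows one way to turn such a timestamp into a YYYY/MM/DD/HH style prefix in plain Python. It assumes the event_timestamp field is an ISO 8601 string with an explicit UTC offset, which this post does not specify, and the function name `time_prefix` is hypothetical.

```python
from datetime import datetime, timezone


def time_prefix(event_timestamp):
    """Convert an ISO 8601 timestamp with an offset to a UTC YYYY/MM/DD/HH/ prefix."""
    parsed = datetime.fromisoformat(event_timestamp)
    if parsed.tzinfo is None:
        # Without an offset the conversion to UTC would be a guess.
        raise ValueError("event_timestamp needs an explicit UTC offset")
    return parsed.astimezone(timezone.utc).strftime("%Y/%m/%d/%H/")


# 23:30 at UTC-05:00 is 04:30 UTC on the following day.
print(time_prefix("2021-08-31T23:30:00-05:00"))  # 2021/09/01/04/
```

Note how the example event lands in the next day's folder once converted, which is the kind of difference you would see between event-time and arrival-time partitioning.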

With Record format conversion, Firehose can convert the format of your input data from JSON to Apache Parquet or Apache ORC before storing the data in Amazon S3 using Amazon ETL service Glue). The compression format. Top 10 Performance Tuning Tips for Amazon Athena, Amazon Managed Streaming for Apache Kafka (Amazon MSK), Backblaze Blog | Cloud Storage & Cloud Backup, Raspberry Pi Foundation blog: news, announcements, stories, ideas, The GitHub Blog: Engineering News and Updates, The History Guy: History Deserves to Be Remembered. Each new value that is determined by the JSONPath select query will result in a new partition in the Kinesis Data Firehose delivery stream. The new folders will be created dynamicallyyou only specify which JSON field will act as dynamic partition key. Note down this Delivery stream name as you will be using this when you configure Kinesis Agent for EC2 instance. Set your S3 buffering conditions appropriately for your use case. For more information, see. Creating an Amazon Kinesis Data Firehose Delivery Stream. Edit the destination details for your delivery stream to point to the newly created A tag is a key-value pair that you can define and assign to AWS resources. This makes the datasets immediately available for analytics tools to run their queries efficiently and enhances fine-grained access control for data. From there, choose Create Delivery Stream, and then select your source and sink. Amazon Kinesis Firehose supports four types of Amazon services as destinations. For that click on the delivery stream and open Test with demo data node. Next, choose the Kinesis Data Stream source to read from. On the other hand, over-partitioning may lead to the creation of smaller objects and wipe out the initial benefit of cost and performance. If I decide to usecustomer_id for my dynamic data partitioning and deliver records to different prefixes, Ill have three active partitions if the records keep ingesting for all of my customers. 
Create an IAM role that has write permission to the Firehose delivery stream. To test a delivery stream using Amazon S3. Previously, customers would need to run an entirely separate job to repartition their data after it landed in Amazon S3 to achieve this functionality. terms in Splunk are sourcetype="aws:firehose:json" and Domain in the Amazon OpenSearch Service Developer Guide. The preceding expression will produce the following S3 folder structure, where
